Building a high-available file storage with nginx, haproxy and lsyncd

In my last blogpost, I described our hosting setup for pretix.eu in detail and talked about the efforts we take to achieve a resistance against failing servers: The system should tolerate the failure of any single server at any given time and keep running. Well, up to now, this was only nearly true.

Out of our 10 servers, one wasn’t allowed to go down and required manual failover operations if it did: Our first application worker node. This is because we stored all user-uploaded and runtime-generated files on the disk of this server and then mounted it on the other servers via NFS. Since NFS is pretty annoying, this caused a lot of trouble whenever that server went down (which luckily did not happen often).

We knew that this could only be a temporary solution from the beginning but it took us more than a year to find a better one that satisfied our wishes.

Our problem

The data store we are looking at is used to store the following types of files:

- Tickets (PDFs, Passbook files)

- Invoices

- Temporary files (e.g. generated exports)

- Event logos and product pictures

- Generated CSS files

- User-uploaded files (e.g. when asking for a photo during registration)

Django calls these files media files and by default stores them to the disk of the server executing the Django code. This is not feasible for us, since our code is currently running on four servers and all of them need to be able to access the files immediately after upload: If you create a file with one request, there is no guarantee that you end up at the same server with your next request that might use the file you just uploaded.

Therefore, we need a shared storage for the files. A simple NFS-like share is not enough, since we want to stay in operation whenever a single server goes down. Therefore, we need some kind of replicated storage. As we all know, replication is hard.

Taking a closer look at these types of files, though, they have some interesting properties:

- They’re all rather small in size.

- Their file names do not matter.

- They never change (or if they do, it’s fine to assign them a new name).

- Their total size is small enough to store them all on a single disk.

- They’re not absolutely essential to our operation. If we lose them, it’s bad, but not as bad as our SQL database.

- Many of them can or must be deleted after a while, but deletion does not need to happen instantly and is allowed to fail temporarily in case of outages.

From a theoretical point of view, these constraints make replication a much easier problem than the generic problem of replicated file storages, since we can use these principles to avoid any conflicts before they occur. It shouldn’t require a complex distributed solution to achieve this (or so we thought). So what options did we have?

Cloud storage

The industry standard for this kind of problem is using Amazon S3. Nobody really talks about this kind of problem (unfortunately), but just goes for S3 or a competitive solution of the same kind. However, we’re self-hosting our service with a local provider instead of a large cloud provider mostly for political (and also financial) reasons. Giving that up would be a big step for us and therefore not something we wanted to do for something “simple” like this.

Object storage software

There is a number of tools that allow you to host a S3-compatible object storage yourself, e.g. Ceph or Minio. Both of these looked very attractive to us at first and we looked at them in detail, especially at Minio. However, since they do solve a more generic problem than the one we have, they require at least three or four nodes in order to achieve a high-availability setup, while with our constraints, the problem should be solvable with two nodes. Additionally, it’s not possible to change or grow a Minio cluster after the fact without re-creating it from scratch which made us hesistant to choose it since we don’t really know our future storage needs.

File-system/block-device replication

There are well-established solutions for sharing a file-system in a peer-to-peer-fashion, like GlusterFS on a file-system level or DRBD on a block-device level. Again, those tools have been created for a very generic, hard problem and contain years of engineering to get it right. The result is a technology that feels way to complex to use and maintain for our usecase. We wanted something we can debug and fix ourselves in case something goes horribly wrong.

Push after save

Some people proposed to us that we should just solve this by calling rsync/sftp/… to the other servers from Django every time after we created a file. We felt that this feels hacky since it is hard to solve problems like deletion, catching up after a downtime, and it sounds like it scales really badly with the number of worker nodes.

Our solution

I discussed this problem with some persons at DjangoCon Europe 2016 and started writing an experimental replicating file server on the way back from that conference, but that turned out to be a bad idea either (who would have thought…).

We finally settled for a solution that only contains well-known standard software and a lot of configuration for them. For lack of a better word, we call our file storage a CDN. In fact, it could probably very easily be expanded to be a “real” CDN with nodes distributed over the globe, should we ever feel the need to do so. Except for the Django integration, we did not write a single line of custom code to make this work. Using only haproxy, nginx, lsyncd and rsync, we end up with the following system properties:

- If a file is pushed to the CDN, it can instantly be retrieved again, as long as the node that handled the push operation has not crashed in the meantime.

- If a node crashes, all files created on this node older than 5 seconds can still be retrieved.

- If a node crashes, upload is still transparently possible to the remaining node.

- Deleting a file is possible whenever the node on which the file was created is available. The deletion will be synced to the other nodes.

- When a crashed node returns, it catches up with newly added files quickly.

Sounds great, doesn’t it?

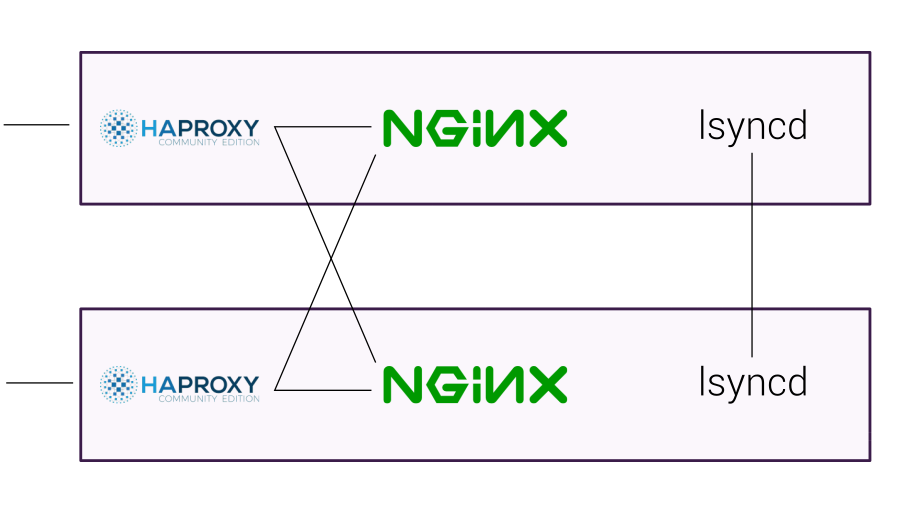

To allow this, haproxy sits in front on every server and decides if you’ll be served directly on this server or will be redirected to another node. nginx then does the actual file serving and uploading, while lsyncd and rsync ensure quick replication.

Overview and API

At a high level, the system looks like this:

Our CDN domain cdn.pretix.space has A and AAAA records set for both servers.

A consumer can use the CDN using a very simple HTTP interface. For example putting a simple text file can be done using

$ curl -v -X PUT -d 'Hello world' \

https://cdn:***@cdn.pretix.space/upload/pub/filename.txt

> PUT /upload/pub/filename.txt HTTP/1.1

> Content-Length: 11

> …

< HTTP/1.1 201 Created

< Server: nginx

< Date: Tue, 20 Mar 2018 17:17:50 GMT

< Location: /1/pub/filename.txt

Some headers have been removed in the examples for brevity. The Location header of the response gives the

path of the created file, in this case /1/pub/filename.txt.

We can then instantly retrieve the file again like this:

$ curl https://cdn.pretix.space/1/pub/filename.txt

Since we used the /pub/ prefix in the filename, we can get it without authentication, with /priv/ we would

need authentication for GET as well. Deletion is just as simple:

$ curl -X DELETE https://cdn:***@cdn.pretix.space/1/pub/filename.txt

So let’s dive into how it works on the server side!

Before we do so, a short disclaimer: This is not intended to be a tutorial that can be followed 1:1. The configuration I show here is not complete and many thinks are very specific to our setup and how we generate the configuration using Ansible. I also do not want to be understood as endorsing this as the best or only way to do this – it just works for us. This is therefore intended as an inspiration on what can be done with this software and a template to follow if you want to build something similar.

We also use some bleeding-edge software here: Some of the settings we use require haproxy 1.8 and nginx 1.13.4 or newer, which is not something you get from official Debian repositories ;)

Upload

In the frontend layer of our haproxy configuration, we can detect an upload request by the fact that it uses the PUT

method and goes to the /upload/ subpath. We can use this to route the requests to a specific upload backend:

acl generic_upload path_beg /upload/

use_backend nanocdn_upload if METH_PUT generic_upload

In this backend, we make both the local nginx server as well as the ones on other nodes available as upsteam servers and

let it to haproxy to decide which to. Using health checks and option redispatch, we can be pretty sure our PUT

operation will succeed even if some nodes are down. The backend config then looks like this:

backend nanocdn_upload

log global

mode http

# Healthcheck

option httpchk GET /check/ HTTP/1.1\r\nHost:cdn.pretix.space

http-check expect status 200

default-server inter 1s fall 5 rise 2

balance roundrobin

timeout check 3s

timeout server 30s

timeout connect 10s

option redispatch

retries 2

http-response set-header Location /%s/%[capture.req.uri,regsub(^/upload/,)]

server 1 188.68.52.34:13080 check

server 2 37.120.177.158:13080 check

As you can see, it adds the Location header to the response, replacing the /upload/ segment of the path with the

number of the server it actually talked to. The server also know its node number and does the same path rewriting. This way,

the first portion of the path will always tell which node created a specific file. The counterpart nginx config makes

use of nginx’ webdav support for storing the file:

location ~ ^/upload/(pub|priv)/(.*)$ {

alias /var/nanocdn/files/1/$1/$2;

client_body_temp_path /var/nanocdn/tmp;

create_full_put_path on;

client_max_body_size 1G;

dav_methods PUT;

dav_access user:r;

auth_basic "private";

auth_basic_user_file /etc/nginx/cdn.htpasswd;

}

Even though we set the access rights to user:r, nginx unfortunately sets them to u+rw, making it possible to replace the file

with different content later, which we don’t want. Therefore, we hack our way around nginx’ rewrite module to achieve this:

if (-f $request_filename) {

set $deny "A";

}

if ($request_method = PUT) {

set $deny "${deny}B";

}

# Do not allow PUT to a file that already exists,

# return a conflict error instead.

if ($deny = AB) {

return 409;

}

Retrieval and deletion

For retrieving a file, we make use of the creation node we encoded into the file path and try to get the file from that node. This way, we can make sure to get the file even if it was created just now and has not yet been synced to a different node. Should the creation node be offline, though, we use the other nodes as a backup. In the haproxy frontend, we insert an ACL for every node:

acl known_node_1 path_beg /1/

use_backend nanocdn_retrieve_1 if known_node_1 !METH_PUT

!generic_upload

And create a different backend for each of the nodes:

backend nanocdn_retrieve_1

# as before …

http-request cache-use nanocdn_cache

http-response cache-store nanocdn_cache

server pretix-cdn1 188.68.52.34:13080 check

server pretix-cdn2 37.120.177.158:13080 backup check

As you can see, pretix-cdn2 is configured as a backup here and we make use of the caching features added in haproxy 1.8. On the nginx

side, every node has a configuration block to serve their own files:

location ~ ^/1/pub/ {

root /var/nanocdn/files;

expires 7d;

dav_methods DELETE;

limit_except GET {

auth_basic "private";

auth_basic_user_file /etc/nginx/cdn.htpasswd;

}

}

Again, we use the WebDAV module to allow deleting the files created on this node directly on this node’s filesystem. There is also a configuration block to serve files created on other nodes that does not allow deletion:

location ~ ^/[^/]+/pub/ {

root /var/nanocdn/files;

error_page 404 =503 /404_as_503.html;

}

You can also see that for files created by other nodes, we never return an error 404, but an error 503 instead: If a node goes down and someone asks us for a file created by that node, we cannot know if it doesn’t exist or if we just haven’t received it yet.

There are similar blocks for the /priv/ sub-paths that just handle access control differently.

Replication

We still need to get newly created files to all nodes. Running a full recursive rsync command on all data might become too slow, so we only do this as a cronjob every now and then in case we missed something. For the real-time synchronization, we use lsyncd to watch the local storage using inotify, aggregate all detected changes over 5 seconds and then issue a rsync command with only the changed directories. The lsyncd config file looks like this:

sync {

default.rsync,

source = "/var/nanocdn/files/1",

target = "37.120.177.158:1",

delay = 5,

rsync = {

protect_args = false

}

}

We disable protect_args because we use rrsync as the user’s remote shell and

rrsync does not allow the -s option.

This way, we get blazingly fast replication between our servers that automatically catches up after a downtime. Since lsyncd and the cronjob

run rsync with the --delete flag, deletions get replicated just as well. There can be no conflicts, as for every file path there is only

one specific node allowed to create or delete it.

Legacy files

Unfortunately, we built this just now and not two years ago, so we have a large number of files that already exist and that do not have a node number in their path. Since it wasn’t feasible to change their path and all references to them, we needed to add a special case. We rsync’d them to both new nodes and added a special case for them in the configuration. In haproxy, we route all requests not specific to a node to a specific backend:

backend nanocdn_retrieve_legacy

# … as before

server pretix-cdn1 188.68.52.34:13080 check

server pretix-cdn2 37.120.177.158:13080 check

As you can see, this just routes the requests to any node that is currently up, since both nodes are guaranteed to have the file – we copied them once and no more files are created in this directory, ever.

However, we also need to delete them and this is where it get’s really dirty. We need to make sure that the DELETE call gets executed on both

nodes, at least when both are up. Since none of the nodes has “authority” on this, we cannot use rsync --delete in a useful way as we do for our new files.

We therefore hack our way around by mirroring all requests to those files to the other node as well with nginx’ brand-new mirror module.

location / {

dav_methods DELETE;

mirror /mirror_pretix-cdn2;

limit_except GET {

auth_basic "private";

auth_basic_user_file /etc/nginx/cdn.htpasswd;

}

root /var/nanocdn/files/legacy;

expires 7d;

}

location /mirror_pretix-cdn2 {

if ($http_x_mirrored) {

return 403;

}

internal;

proxy_pass http://37.120.177.158:13080$request_uri;

proxy_set_header "X-Mirrored" "1";

}

We unfortunately can not put mirror into the limit_except block, leading to extra traffic because we also execute all GET requests twice.

The X-Mirrored header is set and checked to prevent an infinite loop of requests between the servers (guess how we found out).

Django integration

To instruct Django to store all media files to this CDN, we needed to implement a custom Storage class that we now ship on our hosted system. For reference, I put the current version of that implementation here.

Conclusion

This has been running in production for a couple of hours now and after playing around with it in staging and now in production, we like it so far. It’s fast, and it has the availability properties that we wanted.

If you want to build something like this yourself, better check back with us in a couple of weeks whether we still like it. ;) But on the other hand, that’s something we like about it as well: Unlike with a complicated object storage software, the data store behind this is just plain files on an ext4 filesystem. If we stop liking it, we can just copy it anywhere and serve the files with any web server – there is virtually no lock-in to any of the technology involved.

Of course, this approach is far from perfect. A few issues came to surface so far:

-

We did not need to use any complex distributed system and did not write any complex code – but of course the config we wrote is pretty complex. It’s still fine with haproxy, but the resulting nginx config leans heavily on the priority of the different kinds of

locationblocks and is non-trivial to understand or change. -

To my surprise, not every third-party code expects Django storage backends to alter the filenames of stored files. For example, our thumbnailing library failed completely at this point and we needed to replace it. This is also why we currently do not use the CDN to store static files (like CSS, JavaScript) that are generated at deploy time.

-

Once a file is fully synchronized, a user is still being bounced from one server to another, creating unnecessary internal traffic. Our use of caching within haproxy improves this at least slightly.

We’re happy that we no longer have a server that triggers a wake-up call when it goes down and we already ceremoniously rebootet the server we couldn’t reboot before.

With these blogposts, we hope to show you what we do to make the pretix.eu hosted service the most reliable way to use pretix, and at the same time the easiest one for you. If you’d like to sell tickets for your event with a ticket shop that doesn’t depend on a single server, just take a look here!